See updates through October 9, 2022, at end of post.

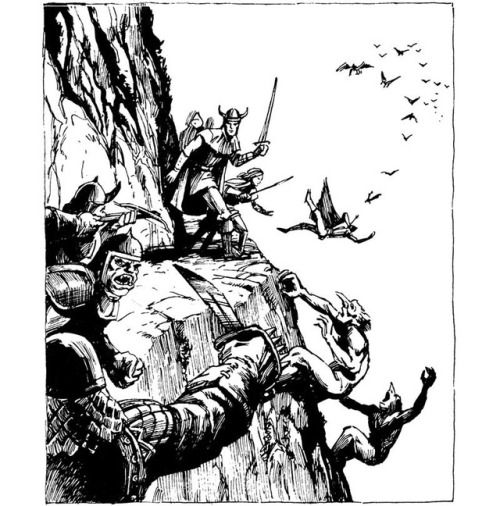

Fig. 1. An image flagged by artificial idiocy as “porn.” “One of those days, stuck on a ledge between hobgoblins, trolls, and whatever that is swooping down from the sky.” Image credited to Jeff Easley, AD&D Greyhawk Adventures, TSR, 1988, fair use.

This is what we are increasingly trusting our future to:

One of those days, stuck on a ledge between hobgoblins, trolls, and whatever that is swooping down from the sky (Jeff Easley, AD&D Greyhawk Adventures, TSR, 1988) https://t.co/vHUBaOMEEH

(this post was flagged as porn by @tumblr‘s stupid filter)

— Bernie Beats Trump (@doctorow) January 13, 2020

Notice the parenthetical. It would seem that Tumblr’s artificial idiocy porn filter hasn’t improved much since December 2018, when Louise Matsakis explained that

computers . . . detect whether groups of pixels appear similar to things they’ve seen in the past. Tumblr’s automated content moderation system might be detecting patterns the company isn’t aware of or doesn’t understand. “Machine learning excels at identifying patterns in raw data, but a common failure is that the algorithms pick up accidental biases, which can result in fragile predictions,” says Carl Vondrick, a computer vision and machine learning professor at Columbia Engineering. For example, a poorly trained AI for detecting pictures of food might erroneously rely on whether a plate is present rather than the food itself.[1]

A word you’ll see often with artificial idiocy is “probabilistic.” This means that the systems are relying on statistical methods—correlation—to reach their conclusions. Matsakis again:

WIRED tried running several Tumblr posts that were reportedly flagged as adult content through Matroid’s NSFW natural imagery classifier, including a picture of chocolate ghosts, a photo of Joe Biden, and one of Burstein’s patents, this time for LED light-up jeans. The classifier correctly identified each one as SFW, though it thought there was a 21 percent chance the chocolate ghosts might be NSFW. The test demonstrates there’s nothing inherently adult about these images—what matters is how different classifiers look at them.[2]

The different results likely stem from the different data sets used to “train” the porn filters,[3] which is to say we’re right back to what we said when I was a computer programmer in the late 1970s and early 1980s: “Garbage In, Garbage Out.”
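The 21 percent figure also shows what “probabilistic” means in practice: a classifier emits a score, and somebody picks a cut-off above which a post gets flagged. Here is a minimal sketch in Python. Only the 0.21 score comes from the Wired test; the function name and both thresholds are invented for illustration, and none of this is Matroid’s or Tumblr’s actual code.

```python
def is_flagged(nsfw_score: float, threshold: float) -> bool:
    """Flag a post when the classifier's NSFW score reaches the cut-off."""
    return nsfw_score >= threshold


# Score Wired reported for the chocolate ghosts (a 21 percent chance of NSFW).
chocolate_ghosts = 0.21

# Hypothetical cut-offs; I have no idea what any real service uses.
for threshold in (0.5, 0.2):
    verdict = "flagged" if is_flagged(chocolate_ghosts, threshold) else "allowed"
    print(f"threshold {threshold}: {verdict}")
```

The same picture with the same score gets opposite verdicts. Nothing about the image changed, only a number somebody chose.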

But the first thing they teach you in any statistics class is “correlation does not prove causation.” One of my statistics texts cites an example where “weekly flu medication sales and weekly sweater sales for an area with extreme seasons would exhibit a positive association because both tend to go up in the winter and down in the summer.”[4] The text continues:

The problem is that the explanation for an observed relationship usually isn’t so obvious as it is in the medication and sweater sales example. Suppose the finding in an observational study is that people who use vitamin supplements get fewer colds than people who don’t use vitamin supplements. It may be that the use of vitamin supplements causes a reduced risk of a cold, but it is also easy to think of other explanations for the observed association. Perhaps those who use supplements also sleep more and it’s actually the sleep difference that causes the difference in the frequency of colds. Or, the users of vitamin supplements may be worried about good health and will take several different actions designed to reduce the risk of getting a cold.[5]

The problem here appears in both examples. It isn’t that sweater sales cause people to buy flu medicine or vice versa. It’s that people want to stay warm in the winter, in part to reduce the risk of falling ill, and that they buy flu medication once they fall ill anyway. The risk and the response both occur at more or less the same time, so a correlation appears due to a third factor, cold weather, which stimulates actions intended to stay warm and is also presumed to increase susceptibility to the flu.

Similarly, vitamins only might reduce the risk of catching cold. Other healthy habits, getting enough sleep, for example, might also help.

In neither case has a causal relationship been shown between correlated variables. In one case, the causal variable is (presumably) winter. In another, it might be extra sleep or other preventative measures. But artificial idiocy relies on correlation anyway, even as statisticians jump up and down and scream, “Don’t do that!”
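The sweater and flu medication example is easy to simulate. The sketch below, in Python, assumes a crude two-season year and invents every sales figure; the names `simulate_sales` and `pearson` are mine, not anything from the textbook. Season drives both series, and the two noise terms are independent of each other.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def simulate_sales(weeks=520, seed=0):
    """Return (winter, sweaters, flu_meds) lists; all figures are made up."""
    rng = random.Random(seed)
    winter, sweaters, flu_meds = [], [], []
    for week in range(weeks):
        is_winter = (week % 52) < 26  # crude two-season year
        winter.append(is_winter)
        # Season drives both series; the noise terms are independent.
        sweaters.append(100 + 80 * is_winter + rng.gauss(0, 10))
        flu_meds.append(50 + 60 * is_winter + rng.gauss(0, 10))
    return winter, sweaters, flu_meds


winter, sweaters, flu_meds = simulate_sales()
overall = pearson(sweaters, flu_meds)

# Hold the season fixed and look again.
cold = [(s, f) for w, s, f in zip(winter, sweaters, flu_meds) if w]
within_winter = pearson([s for s, _ in cold], [f for _, f in cold])

print(f"all weeks:   r = {overall:.2f}")
print(f"winter only: r = {within_winter:.2f}")
```

Across all weeks the two series move together strongly. Within winter alone, where the third factor is held constant, the association should all but vanish, because nothing in the simulation links sweaters to flu medication except the season.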

The computer scientists’ response is to rely on “big data.” A larger sample narrows the confidence interval, allegedly reducing the probability of error. But the problem lies in the method itself, rather than in the confidence interval, as we see with Tumblr’s flagging of the image in Cory Doctorow’s tweet quoted above.
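To see why a bigger sample doesn’t rescue the method, consider what it actually buys. The sketch below uses the standard Fisher z approximation for a 95 percent confidence interval around a correlation. The observed correlation of 0.9 and both sample sizes are assumptions I picked for illustration, standing in for a confounded pair like sweaters and flu medication.

```python
import math


def correlation_ci(r, n, z_crit=1.96):
    """Approximate 95% confidence interval for a correlation (Fisher z)."""
    z = math.atanh(r)
    margin = z_crit / math.sqrt(n - 3)
    return math.tanh(z - margin), math.tanh(z + margin)


r_observed = 0.9  # assumed: a strong but confounded correlation
for n in (50, 50_000):
    low, high = correlation_ci(r_observed, n)
    print(f"n = {n:>6}: {low:.3f} to {high:.3f}")
```

The interval tightens around the same number. More data makes us more certain that the correlation is there. It says nothing about why it is there.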

Long before artificial idiocy, the professor in my first methods class, Valerie Sue, warned against “data mining,” which she explained as searching large datasets for correlations. These correlations might be entirely spurious, she explained, because they may fail to correctly associate cause with actual causal variables. “Big data” is simply “big data mining,” the very thing she warned against, just on a massive scale.
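Her warning can be demonstrated with pure noise. In this Python sketch, every “variable” is random and unrelated to the target by construction. The function name `dredge`, the counts (1,000 candidate variables, 20 observations each), and the seed are all arbitrary choices of mine.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def dredge(n_variables=1000, n_observations=20, seed=1):
    """Search random noise for the variable best correlated with a noise target."""
    rng = random.Random(seed)
    target = [rng.gauss(0, 1) for _ in range(n_observations)]
    best = 0.0
    for _ in range(n_variables):
        candidate = [rng.gauss(0, 1) for _ in range(n_observations)]
        r = pearson(candidate, target)
        if abs(r) > abs(best):
            best = r  # keep the strongest correlation seen so far
    return best


best_r = dredge()
print(f"strongest correlation among 1000 noise variables: r = {best_r:.2f}")
```

Search enough meaningless variables and one of them will look impressive. The more variables you mine, the better your best spurious finding gets, which is exactly backwards from what “big” is supposed to buy.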

Tumblr seems to have chosen its “big data” poorly for identifying porn and seems to have failed to fix this problem that is now over a year old. But the fallacy fundamentally remains the same: In fact, correlation proves absolutely nothing.

Nonetheless, one of the reasons I had to leave the San Francisco Bay Area was that it was apparent, from billboards to be seen nearly everywhere I drove, that artificial idiocy is the new god. It is worshipped. It is trusted. It is taken as infallible.

From a human science perspective, this idolatry is appalling. Statistical data are quantitative and therefore superficial. I can’t emphasize this strongly enough: These correlations are thus between superficial variables. Our new priesthood consists of those who “operationalize” rich reality, reducing it to mere quantities. Our new prophets are those who report the results.

This is even more appalling from a systems theory perspective, in which linear causation is the exception rather than the rule: A rarely causes B; rather, A and B arise jointly, influencing each other, constraining each other in some ways, enhancing each other in other ways. Except it isn’t just A and B. It’s a multitude of factors in a relationship of mutual causality, creating an ecosystem, producing emergent properties, results that could not be forecast and cannot be reduced to the components of the system.[6]

Artificial idiocy is not merely a false god. It is a modern Satan, tempting us with correlations, leading us astray with superficial data, and taken as authoritative simply because it processes data beyond the capacity of mere humans.

Update, February 20, 2021: If there are ethical issues with artificial idiocy, it would seem that Google would like very much for us to not know about them.[7]

Update, February 23, 2021: This is satire, right? I had not been aware that Anthony Levandowski, who was the central figure in the dispute between Uber and Waymo over self-driving car technology, and who was pardoned by Donald Trump for stealing trade secrets from Waymo, had founded a church worshipping artificial idiocy. He dissolved it and donated its funds to the National Association for the Advancement of Colored People’s Legal Defense and Education Fund.[8] Everything I’ve heard and experienced, including a tangle with Twitter’s artificial idiots,[9] since writing the original post above only reinforces it. However, the technology is being used in combination with networking to impressive effect with traffic signals in the Pittsburgh area.[10]

But here’s the thing: A statistical approach that constitutes artificial idiocy[11] probably is the best that can be done to improve traffic flow. Controls here will never be perfect and the Wall Street Journal headline about eliminating traffic congestion[12] exaggerates. The downside is minimal: In a vast majority of cases, the statistical approach will yield a benefit. In the minority, the effect is unlikely to be worse than with uncoordinated signals. Nobody’s going to die waiting for a red light, certainly not any more than they do now.

Update, March 13, 2021: An article in the Columbia Journalism Review[13] reminded me of my own brush with Twitter’s artificial idiots, which were unleashed in the wake of Donald Trump’s inflammatory rhetoric. The company had officially tolerated that rhetoric[14] until it led[15] to a coup attempt at the U.S. Capitol,[16] whereupon Twitter, along with other social media companies, stopped tolerating it.[17]

In my case I had commented on the difference between old and new designs for ceremonial keys to the City of Pittsburgh by noting that they were traditionally hung on lanyards around the honoree’s neck, something the newer design would not make possible. Twitter’s artificial idiots, I suspect, saw the words ‘hung’ and ‘neck’ and concluded that my tweet was abusive. My only appeal seems to have been to the very same artificial idiot.
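I can only guess at what Twitter’s system does, but the failure I suspect is easy to reproduce. The Python sketch below is a deliberately naive filter: the word list is hypothetical, the function name is mine, and the sample sentence is a paraphrase of my tweet rather than its exact text.

```python
import re

# Hypothetical word list; Twitter has not said what its filter looks for.
TRIGGER_WORDS = {"hung", "neck"}


def looks_abusive(text: str) -> bool:
    """Flag text that contains every trigger word, ignoring all context."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return TRIGGER_WORDS <= words


# Paraphrase of the tweet, not the original wording.
tweet = ("Keys to the city are traditionally hung on a lanyard "
         "around the honoree's neck.")
print(looks_abusive(tweet))
```

A remark about ceremonial keys trips the filter because the filter matches words, not meaning. A real system is surely more elaborate, but elaborate correlation is still correlation.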

Facebook’s approach has hardly been better, subjecting vast numbers of poorly paid folks to endless horrifying and utterly repugnant videos, in a mass production slave-driving approach.[18] Apart from the sheer inhumanity of this approach, people working these jobs are likely to devote every bit as much thought to their decisions to censor or not as they are being paid for, which isn’t much, particularly as they are pushed to “produce” ever more.

The article on Facebook’s approach is instructive, however, in that it reveals something of the sheer scope of the problem. It’s huge,[19] probably much too much for an intelligent approach. Artificial idiocy, relying not on understanding but rather on statistical correlations,[20] seems to be the only alternative.

Which is not at all satisfactory. Which is leaving me pretty damned grumpy.

Update, March 15, 2021: Twitter suspended users mentioning a city in Tennessee named Memphis. Yes, really. It was a “bug,” they say.[21] No, Twitter, no. It is far more than a bug. It’s what you get for using artificial idiocy. It’s the very sort of thing I said would happen.[22]

Update, April 4, 2021: It’s really rather ludicrous.

Developers of artificial idiocy have been getting themselves all excited that they can read human minds. Except that the dataset they’re working from didn’t filter out the sequence of the images. And so the artificial idiots have been matching on the sequence because the images weren’t randomized.[23]
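How a classifier can “succeed” by matching on sequence is worth spelling out. The toy simulation below, in Python, is not the Purdue team’s data or analysis. It assumes a recording that drifts slowly over a session and contains no information whatsoever about which image is shown; the drift rate, noise level, trial counts, and function names are all invented.

```python
import random


def make_trials(randomized, n_classes=4, per_class=50, seed=2):
    """Return (labels, signals); signals hold drift and noise, nothing else."""
    rng = random.Random(seed)
    labels = [c for c in range(n_classes) for _ in range(per_class)]
    if randomized:
        rng.shuffle(labels)  # presentation order no longer tracks the class
    # The recording drifts slowly over the session, whatever image is shown.
    signals = [0.1 * t + rng.gauss(0, 0.2) for t in range(len(labels))]
    return labels, signals


def nearest_neighbor_accuracy(labels, signals):
    """Train on even-numbered trials, test on odd ones, with 1-nearest-neighbor."""
    train = [(signals[t], labels[t]) for t in range(0, len(labels), 2)]
    tests = [(signals[t], labels[t]) for t in range(1, len(labels), 2)]
    hits = 0
    for signal, label in tests:
        # Predict the label of the training trial with the closest signal.
        _, predicted = min(train, key=lambda pair: abs(pair[0] - signal))
        hits += predicted == label
    return hits / len(tests)


blocked = nearest_neighbor_accuracy(*make_trials(randomized=False))
shuffled = nearest_neighbor_accuracy(*make_trials(randomized=True))
print(f"images shown class by class:  {blocked:.0%} accuracy")
print(f"images shown in random order: {shuffled:.0%} accuracy")
```

When the images are shown one class at a time, the classifier looks brilliant, because trials that are close in time share both a drift value and a label. Shuffle the presentation order and it should fall to roughly chance, which for four classes is 25 percent. It was never reading anything but the clock.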

My god, they’re idiots. Both the machines and their developer/fans. And there is no excuse for it. None. The neurologists blame the artificial idiocy fan boys for not listening to neurologists,[24] but this is not rocket science. The fan boys should have figured this out for themselves.

Update, April 5, 2021: Institutional Review Boards (IRBs) are, I think, something researchers universally dread. They are empowered to stop a project dead in its tracks or to require changes, and they are expected to do so on ethical grounds, though I understand they can advise as to research design issues as well. They’re powerful and fearsome.

They are also essential for inquiry involving human subjects and should also be seen as such for non-human animal subjects. And this isn’t just about being nice. Subjects, whether human or non-human, who feel abused may find ways to sabotage the results, contaminating the body of knowledge. I have worried about medical science on precisely these grounds.[25]

Reid Blackman proposes a similar approach for artificial idiocy.[26] Google[27] would do well to pay attention.

Update #2, April 5, 2021:

As the Supreme Court issued an order Monday declaring moot a lawsuit over [Donald] Trump’s blocking of some Twitter users from commenting on his feed, [Clarence] Thomas weighed in with a 12-page lament about the power of social media firms like Twitter. . . .

Thomas singled out the owners of Google and Facebook by name, arguing that the firms are currently unaccountable personal fiefdoms with massive power.

“Although both companies are public, one person controls Facebook (Mark Zuckerberg), and just two control Google (Larry Page and Sergey Brin),” Thomas wrote.

Thomas’ opinion amounts to an invitation to Congress to declare Twitter, Facebook and similar companies “common carriers,” essentially requiring them to host all customers regardless of their views. At the moment, the companies have sweeping authority to take down any post and to suspend or terminate any account.[28]

This issue just isn’t going away. It’s terribly problematic. On the one hand, I can hardly excuse Donald Trump’s firehose of hatred. On the other, social media companies are relying on artificial idiocy to solve their problem of how to control hate speech, which is a grievous mistake,[29] and I can’t disagree with Clarence Thomas’ complaint[30] that power over what I have called the “public square of the Internet”[31] is in unaccountable hands.[32] He’s right.

Update, October 9, 2022: Yes, this is old (from March). It just cropped up on my radar:

In 2019 it was revealed that the Dutch tax authorities had used a self-learning algorithm to create risk profiles in an effort to spot child care benefits fraud.

Authorities penalized families over a mere suspicion of fraud based on the system’s risk indicators. Tens of thousands of families — often with lower incomes or belonging to ethnic minorities — were pushed into poverty because of exorbitant debts to the tax agency. Some victims committed suicide. More than a thousand children were taken into foster care.[33]

And they were wrong. Not only were they wrong, but they were racist bigots about being wrong.[34] What the fuck did I tell you about artificial idiocy?[35] But absolute fucking idiots, whether artificial or human, will be absolute fucking idiots.

- [1]Louise Matsakis, “Tumblr’s Porn-Detecting AI Has One Job—and It’s Bad at It,” Wired, December 5, 2018, https://www.wired.com/story/tumblr-porn-ai-adult-content/↩

- [2]Louise Matsakis, “Tumblr’s Porn-Detecting AI Has One Job—and It’s Bad at It,” Wired, December 5, 2018, https://www.wired.com/story/tumblr-porn-ai-adult-content/↩

- [3]Louise Matsakis, “Tumblr’s Porn-Detecting AI Has One Job—and It’s Bad at It,” Wired, December 5, 2018, https://www.wired.com/story/tumblr-porn-ai-adult-content/↩

- [4]Jessica M. Utts and Robert F. Heckard, Mind on Statistics, 2nd ed. (Belmont, CA: Brooks/Cole, 2004), 157.↩

- [5]Jessica M. Utts and Robert F. Heckard, Mind on Statistics, 2nd ed. (Belmont, CA: Brooks/Cole, 2004), 157.↩

- [6]Fritjof Capra, The Web of Life: A New Scientific Understanding of Living Systems (New York: Anchor, 1996); Joanna Macy, Mutual Causality in Buddhism and General Systems Theory (Delhi, India: Sri Satguru, 1995).↩

- [7]David Benfell, “Having already fucked up in ousting an ethics researcher, Google doubles down,” Not Housebroken, February 19, 2021, https://disunitedstates.org/2021/02/19/having-already-fucked-up-in-ousting-an-ethics-researcher-google-doubles-down/↩

- [8]Anthony Levandowski, “The former Uber exec who was pardoned by Trump has closed his church that worshipped AI, donating its funds to the NAACP,” Business Insider, February 19, 2021, https://www.businessinsider.com/uber-google-ai-anthony-levandowski-trump-pardon-church-naacp-2021-2↩

- [9]Twitter’s artificial idiots flagged as abusive a reply I posted about ceremonial keys to the City of Pittsburgh. Such keys, as I recall, are traditionally hung on a lanyard about the honoree’s neck and I was pointing out that a newer design lacked the hole needed to string that lanyard. The bots likely saw the words ‘hung’ and ‘neck.’ The appeal process was, painfully obviously, to the very same artificial idiots, which is to say there was no appeal at all.↩

- [10]Henry Williams, “Artificial Intelligence May Make Traffic Congestion a Thing of the Past,” Wall Street Journal, June 26, 2018, https://www.wsj.com/articles/artificial-intelligence-may-make-traffic-congestion-a-thing-of-the-past-1530043151↩

- [11]David Benfell, “Our new Satan: artificial idiocy and big data mining,” Not Housebroken, February 20, 2021, https://disunitedstates.org/2020/01/13/our-new-satan-artificial-idiocy-and-big-data-mining/↩

- [12]Henry Williams, “Artificial Intelligence May Make Traffic Congestion a Thing of the Past,” Wall Street Journal, June 26, 2018, https://www.wsj.com/articles/artificial-intelligence-may-make-traffic-congestion-a-thing-of-the-past-1530043151↩

- [13]Salil Tripathi, “Twitter is caught between politics and free speech. I was collateral damage,” Columbia Journalism Review, March 12, 2021, https://www.cjr.org/first_person/twitter-is-caught-between-politics-and-free-speech-i-was-collateral-damage.php↩

- [14]Joseph Cox and Jason Koebler, “Why Won’t Twitter Treat White Supremacy Like ISIS? Because It Would Mean Banning Some Republican Politicians Too,” Vice, April 25, 2019, https://motherboard.vice.com/en_us/article/a3xgq5/why-wont-twitter-treat-white-supremacy-like-isis-because-it-would-mean-banning-some-republican-politicians-too; Elizabeth Dwoskin, “Twitter adds labels for tweets that break its rules — a move with potentially stark implications for Trump’s account,” Washington Post, June 27, 2019, https://www.washingtonpost.com/technology/2019/06/27/twitter-adds-labels-tweets-that-break-its-rules-putting-president-trump-companys-crosshairs/; Twitter, “World Leaders on Twitter,” January 5, 2018, https://blog.twitter.com/en_us/topics/company/2018/world-leaders-and-twitter.html↩

- [15]Devlin Barrett, “Trump’s remarks before Capitol riot may be investigated, says acting U.S. attorney in D.C.,” Washington Post, January 7, 2021, https://www.washingtonpost.com/national-security/federal-investigation-capitol-riot-trump/2021/01/07/178d71ac-512c-11eb-83e3-322644d82356_story.html; Andrew G. McCabe and David C. Williams, “Trump’s New Criminal Problem,” Politico, January 11, 2021, https://www.politico.com/news/magazine/2021/01/11/trumps-new-criminal-problem-457298↩

- [16]David Benfell, “The danger that remains,” Not Housebroken, January 22, 2021, https://disunitedstates.org/2021/01/07/the-danger-that-remains/; David Benfell, “Riot or insurrection? Lies or madness?” Not Housebroken, January 22, 2021, https://disunitedstates.org/2021/01/12/riot-or-insurrection-lies-or-madness/; David Benfell, “The State of the Disunion, 2021,” Not Housebroken, January 22, 2021, https://disunitedstates.org/2021/01/10/the-state-of-the-disunion-2021/; David Benfell, “The second farce,” Not Housebroken, February 14, 2021, https://disunitedstates.org/2021/02/14/the-second-farce/↩

- [17]Margi Murphy, “Facebook, Instagram and Twitter lock Donald Trump’s accounts after praise for Capitol Hill rioters,” Telegraph, January 7, 2021, https://www.telegraph.co.uk/technology/2021/01/06/calls-twitter-facebook-mute-donald-trump-violence-breaks-capitol/; Tony Romm and Elizabeth Dwoskin, “Trump banned from Facebook indefinitely, CEO Mark Zuckerberg says,” Washington Post, January 7, 2021, https://www.washingtonpost.com/politics/trump-resignations-25th-amendment/2021/01/07/e131ce10-50a3-11eb-bda4-615aaefd0555_story.html; Nitasha Tiku, Tony Romm, and Craig Timberg, “Twitter bans Trump’s account, citing risk of further violence,” Washington Post, January 8, 2021, https://www.washingtonpost.com/technology/2021/01/08/twitter-trump-dorsey/↩

- [18]Casey Newton, “Bodies in Seats,” Verge, June 19, 2019, https://www.theverge.com/2019/6/19/18681845/facebook-moderator-interviews-video-trauma-ptsd-cognizant-tampa↩

- [19]Casey Newton, “Bodies in Seats,” Verge, June 19, 2019, https://www.theverge.com/2019/6/19/18681845/facebook-moderator-interviews-video-trauma-ptsd-cognizant-tampa↩

- [20]David Benfell, “Our new Satan: artificial idiocy and big data mining,” Not Housebroken, February 23, 2021, https://disunitedstates.org/2020/01/13/our-new-satan-artificial-idiocy-and-big-data-mining/↩

- [21]Alyse Stanley, “Twitter Banned Me for Saying the ‘M’ Word: Memphis,” Gizmodo, March 15, 2021, https://gizmodo.com/twitter-banned-me-for-saying-the-m-word-memphis-1846474378↩

- [22]David Benfell, “Our new Satan: artificial idiocy and big data mining,” Not Housebroken, February 23, 2021, https://disunitedstates.org/2020/01/13/our-new-satan-artificial-idiocy-and-big-data-mining/↩

- [23]Purdue University, “Blind Spots Uncovered at the Intersection of AI and Neuroscience – Dozens of Scientific Papers Debunked,” SciTechDaily, April 3, 2021, https://scitechdaily.com/blind-spots-uncovered-at-the-intersection-of-ai-and-neuroscience-dozens-of-scientific-papers-debunked/↩

- [24]Purdue University, “Blind Spots Uncovered at the Intersection of AI and Neuroscience – Dozens of Scientific Papers Debunked,” SciTechDaily, April 3, 2021, https://scitechdaily.com/blind-spots-uncovered-at-the-intersection-of-ai-and-neuroscience-dozens-of-scientific-papers-debunked/↩

- [25]Harriet A. Washington, Medical Apartheid (New York: Doubleday, 2006).↩

- [26]Reid Blackman, “If Your Company Uses AI, It Needs an Institutional Review Board,” Harvard Business Review, April 1, 2021, https://hbr.org/2021/04/if-your-company-uses-ai-it-needs-an-institutional-review-board↩

- [27]Mitchell Clark and Zoe Schiffer, “After firing a top AI ethicist, Google is changing its diversity and research policies,” Verge, February 19, 2021, https://www.theverge.com/2021/2/19/22291631/google-diversity-research-policy-changes-timnet-gebru-firing; Ina Fried, “Google tweaks diversity, research policies following inquiry,” Axios, February 19, 2021, https://www.axios.com/google-tweaks-diversity-research-policies-following-inquiry-8baa6346-d2a2-456f-9743-7912e4659ca2.html; Alex Hanna, [Twitter thread], Thread Reader App, February 18, 2021, https://threadreaderapp.com/thread/1362476196693303297.html; Jeremy Kahn, “Google’s ouster of a top A.I. researcher may have come down to this,” Fortune, December 9, 2020, https://fortune.com/2020/12/09/google-timnit-gebru-top-a-i-researcher-large-language-models/; Zoe Schiffer, “Google fires second AI ethics researcher following internal investigation,” Verge, February 19, 2021, https://www.theverge.com/2021/2/19/22292011/google-second-ethical-ai-researcher-fired↩

- [28]Josh Gerstein, “Justice Thomas grumbles over Trump’s social media ban,” Politico, April 5, 2021, https://www.politico.com/news/2021/04/05/justice-clarence-thomas-trump-twitter-ban-479046↩

- [29]David Benfell, “Our new Satan: artificial idiocy and big data mining,” Not Housebroken, April 4, 2021, https://disunitedstates.org/2020/01/13/our-new-satan-artificial-idiocy-and-big-data-mining/↩

- [30]Josh Gerstein, “Justice Thomas grumbles over Trump’s social media ban,” Politico, April 5, 2021, https://www.politico.com/news/2021/04/05/justice-clarence-thomas-trump-twitter-ban-479046↩

- [31]David Benfell, “The public square of the Internet,” Not Housebroken, March 15, 2021, https://disunitedstates.org/2019/06/06/the-public-square-of-the-internet/↩

- [32]Josh Gerstein, “Justice Thomas grumbles over Trump’s social media ban,” Politico, April 5, 2021, https://www.politico.com/news/2021/04/05/justice-clarence-thomas-trump-twitter-ban-479046↩

- [33]Melissa Heikkilä, “Dutch scandal serves as a warning for Europe over risks of using algorithms,” Politico, March 29, 2022, https://www.politico.eu/article/dutch-scandal-serves-as-a-warning-for-europe-over-risks-of-using-algorithms/↩

- [34]Melissa Heikkilä, “Dutch scandal serves as a warning for Europe over risks of using algorithms,” Politico, March 29, 2022, https://www.politico.eu/article/dutch-scandal-serves-as-a-warning-for-europe-over-risks-of-using-algorithms/↩

- [35]David Benfell, “Our new Satan: artificial idiocy and big data mining,” Not Housebroken, April 5, 2021, https://disunitedstates.org/2020/01/13/our-new-satan-artificial-idiocy-and-big-data-mining/↩

Most jobs on LinkedIn require data mining skills. I have come up with 30 product enhancement ideas for existing products. I have offered to GIVE THE IDEA UP FRONT in exchange for a deal memo that the company would construct. Once that folly played out and I got no bites, I tried to GIVE AWAY product enhancement ideas to build up my testimonials. My bite rate was probably 2% for a FREE IDEA, with no strings attached if the idea was not liked. If the idea was liked, then all that would be required would be a LinkedIn testimonial.

It’s practically impossible to share nowadays because everyone is too busy data mining.

Now this is embarrassing. My first comment disappeared after I posted it, so I left a follow up comment to explain how my experience was. I was about to click off when suddenly, my first comment became visible. So now I have left a third comment because I didn’t want to exit with an incorrect observation.

Our analytics and data mining world can’t even get the basics right. Try this experiment: post a link on Facebook, let the corresponding image appear, then change the link. The image will not change. The image will remain with the first link even though the first link was replaced before posting. This Facebook defect has been around probably since day one. It recently cost me a LOT of hits for a YouTube video I made. I chose to change the thumbnail after I had posted the video on YouTube. Within a couple of days, the corresponding thumbnail on Facebook went blank. Facebook had no way to recognize the new thumbnail. I ended up having to repost the topic with a picture insert and text link to match the new thumbnail. However, since most people click on the image, the image took them nowhere. I had posted a visual link to the new video but could not burn it into the image, so I basically watched the view count drop by a remarkable amount even as what I had posted about made the news. If our data mining whizzes can’t even discover the SIMPLEST of errors regarding how their own pages work, what hope is there for data mining to get it right in other areas?
